AI Weekly Recap (Week 35)

The most important news and breakthroughs in AI this week

Good morning, AI enthusiasts. The cost of building robots is falling fast: Nvidia just released a robotics developer kit priced like a high-end laptop. Meanwhile, Google unveiled an image editor so capable that it is already being called the best in the world.

Google’s new Gemini 2.5 Flash Image (aka nano-banana in testing) lets users perform multi-step image edits while preserving character consistency. It also supports style blending, image mixing, and intelligent scene adjustments using natural language prompts.

→ Multi-turn edits let users layer changes without losing consistency

→ Blends styles, objects, and images while reasoning about scenes

→ Priced at $0.039 per image via API & Google AI Studio, cheaper than competitors
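To put the per-image price in perspective, here is a minimal cost sketch. It only multiplies the $0.039 figure quoted above by an image count. The function name and the example volumes are illustrative assumptions, and actual billing may differ.

```python
from decimal import Decimal

# Per-image price quoted in this recap (USD); actual billing may differ.
PRICE_PER_IMAGE = Decimal("0.039")


def estimate_cost(num_images: int) -> Decimal:
    """Return the estimated cost in USD for generating num_images images."""
    if num_images < 0:
        raise ValueError("num_images must be non-negative")
    return PRICE_PER_IMAGE * num_images


if __name__ == "__main__":
    for count in (1, 100, 1000):  # illustrative volumes, not from the recap
        print(f"{count:>5} images -> ${estimate_cost(count):.2f}")
```

At the quoted price, 1,000 images would come to about $39.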

🧰 Who is this useful for:

Digital artists & illustrators looking for smarter AI editing tools

App developers building image-generation platforms

Filmmakers & content creators needing consistent character visuals

AI enthusiasts experimenting with multi-step image generation

Try it now → http://gemini.google.com

Anthropic is piloting a “Claude for Chrome” extension that lets its AI assistant take controlled actions in users’ browsers. The initiative focuses on studying and mitigating security risks like prompt injections seen in other AI browsers.

→ Uses permissions and safety measures to reduce vulnerabilities

→ Builds on the earlier “Computer Use” agentic tool with improved capabilities

→ Responds to security issues observed in Perplexity’s Comet browser

🧰 Who is this useful for:

AI researchers studying agentic systems and security

Developers building AI-powered browser tools

Security specialists tracking prompt injection risks

Power users curious about AI-assisted browsing

Try it now → https://claude.ai/chrome

OpenAI’s Codex now comes with an IDE extension, GitHub code review integration, local-cloud task handoff, and a rebuilt CLI powered by GPT-5, enabling seamless agentic coding and smarter code management.

→ IDE Extension & Sign-in: Works in VS Code, Cursor, Windsurf; access without API keys via ChatGPT plan

→ Local-Cloud Handoff: Execute tasks in the cloud while keeping local state, continuing seamlessly in your IDE

→ GitHub & CLI Upgrades: Auto PR reviews, agentic coding in CLI with new UI, commands, image inputs, and web search

🧰 Who is this useful for:

Developers and engineers coding across IDEs and GitHub

Teams looking to automate code reviews and validations

AI enthusiasts exploring agentic coding tools

Enterprises managing cloud-local coding workflows

Try it now → https://github.com/openai/codex

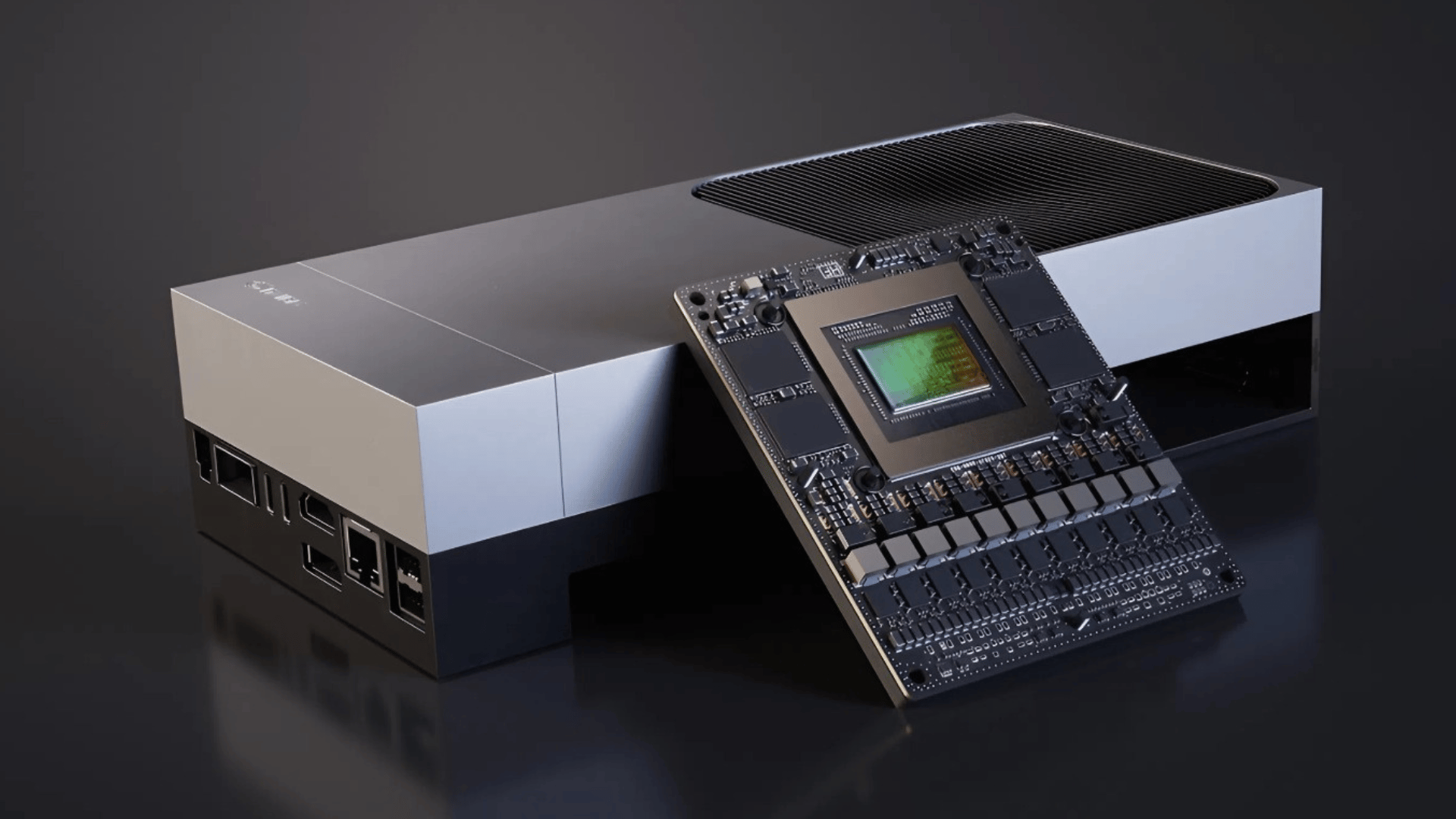

Nvidia’s latest robotics chip, the Jetson AGX Thor, is now available as a developer kit for $3,499. Designed as a “robot brain,” it enables companies to prototype robots and run generative AI models for advanced perception and reasoning.

→ Powerful chip: up to 7.5× the AI compute of its predecessor, Jetson AGX Orin, with 128GB of memory for AI models

→ Developer & production kits: $3,499 dev kit, $2,999 bulk production modules

→ Robotics growth: Used by Amazon, Meta, Boston Dynamics; Nvidia targets expansion

🧰 Who is this useful for:

Robotics developers and AI engineers

Companies prototyping humanoid or autonomous robots

Investors tracking Nvidia’s AI and robotics growth

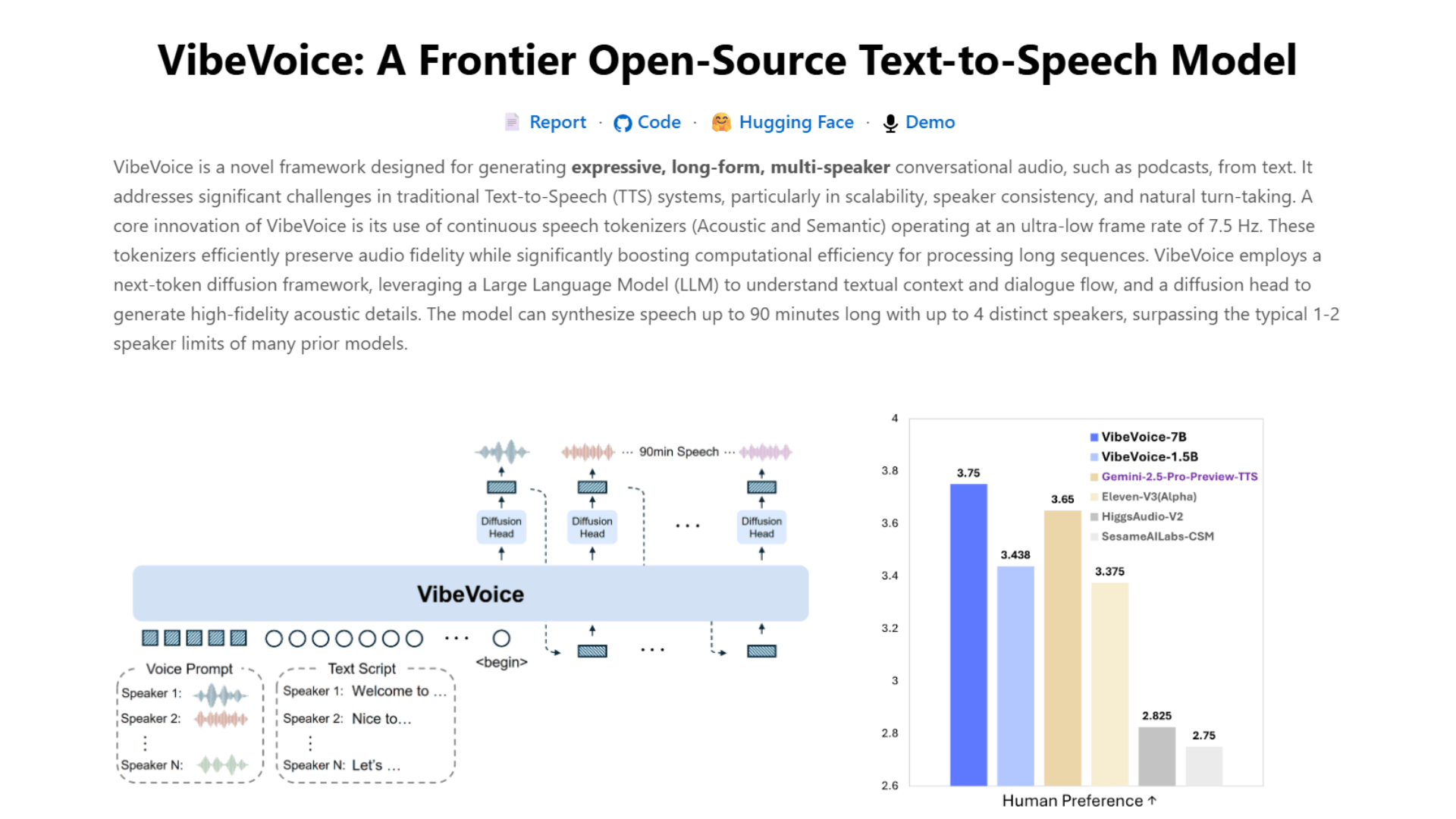

Microsoft’s new VibeVoice-1.5B generates long-form, high-fidelity audio with up to four distinct speakers, supporting multi-person conversations and background music integration.

→ Generates up to 90 minutes of continuous speech in a single session

→ Supports up to 4 speakers with natural turn-taking and voice consistency

→ Achieves 3,200× audio compression, making streaming and storage efficient
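A rough back-of-the-envelope sketch shows what a 3,200× ratio could mean for a 90-minute session. It assumes 24 kHz input audio and reads the ratio as raw audio samples per compressed frame. Both are assumptions for illustration, not details stated in this recap, and the function names are hypothetical.

```python
# Assumption: 24 kHz input audio; the recap does not state the sample rate.
ASSUMED_SAMPLE_RATE_HZ = 24_000
COMPRESSION_RATIO = 3_200  # figure quoted in the recap
MAX_MINUTES = 90           # figure quoted in the recap


def frames_per_second(sample_rate_hz: int = ASSUMED_SAMPLE_RATE_HZ,
                      ratio: int = COMPRESSION_RATIO) -> float:
    """Compressed frames per second, if `ratio` samples map to one frame."""
    return sample_rate_hz / ratio


def total_frames(minutes: float = MAX_MINUTES) -> float:
    """Compressed frames needed to cover `minutes` of audio."""
    return minutes * 60 * frames_per_second()


if __name__ == "__main__":
    print(f"Frames per second: {frames_per_second()}")
    print(f"Frames for {MAX_MINUTES} minutes: {total_frames():.0f}")
```

Under these assumptions, each second of audio shrinks from 24,000 samples to 7.5 frames, which is what would keep a 90-minute sequence small enough to handle in one session.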

🧰 Who is this useful for:

Podcasters creating multi-host shows

Audiobook producers needing expressive, long-form narration

Virtual assistant developers seeking natural, human-like voices

Game designers & interactive media creators

Try it now → https://github.com/microsoft/VibeVoice

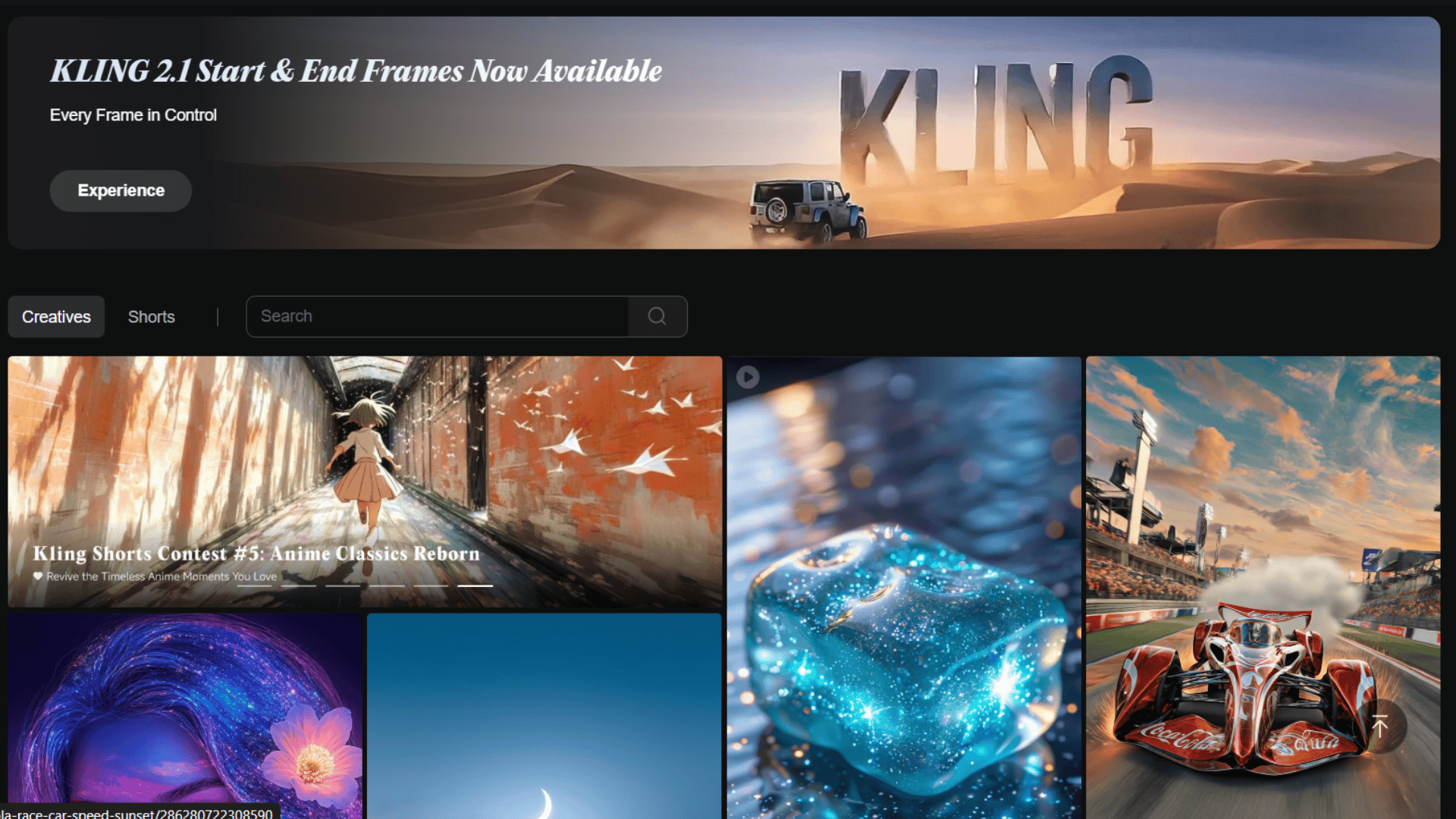

KLING 2.1 introduces Start & End Frames, letting users define precise entry and exit points for every video, with major performance improvements.

→ Defines precise start & end frames for granular control

→ Delivers a 235% performance boost over version 1.6

🧰 Who is this useful for:

Video creators producing cinematic clips

Animators needing frame-accurate control

Content developers generating synchronized video & speech

Filmmakers seeking smooth camera moves

Try it now → https://app.klingai.com/global/

That's it! See you tomorrow.

- Dr. Alvaro Cintas